“o1: A Technical Primer” by Jesse Hoogland

TL;DR: In September 2024, OpenAI released o1, its first "reasoning model". This model exhibits remarkable test-time scaling laws, which complete a missing piece of the Bitter Lesson and open up a new axis for scaling compute. Following Rush and Ritter (2024) and Brown (2024a, 2024b), I explore four hypotheses for how o1 works and discuss some implications for future scaling and recursive self-improvement.

The Bitter Lesson(s)

The Bitter Lesson is that "general methods that leverage computation are ultimately the most effective, and by a large margin." After a decade of scaling pretraining, it's easy to forget this lesson is not just about learning; it's also about search.

OpenAI didn't forget. Their new "reasoning model" o1 has figured out how to scale search during inference time. This does not use explicit search algorithms. Instead, o1 is trained via RL to get better at implicit search via chain of thought [...]

---

Outline:

(00:40) The Bitter Lesson(s)

(01:56) What we know about o1

(02:09) What OpenAI has told us

(03:26) What OpenAI has shown us

(04:29) Proto-o1: Chain of Thought

(04:41) In-Context Learning

(05:14) Thinking Step-by-Step

(06:02) Majority Vote

(06:47) o1: Four Hypotheses

(08:57) 1. Filter: Guess + Check

(09:50) 2. Evaluation: Process Rewards

(11:29) 3. Guidance: Search / AlphaZero

(13:00) 4. Combination: Learning to Correct

(14:23) Post-o1: (Recursive) Self-Improvement

(16:43) Outlook

---

First published:

December 9th, 2024

Source:

https://www.lesswrong.com/posts/byNYzsfFmb2TpYFPW/o1-a-technical-primer

---

Narrated by TYPE III AUDIO.

---

Images from the article:

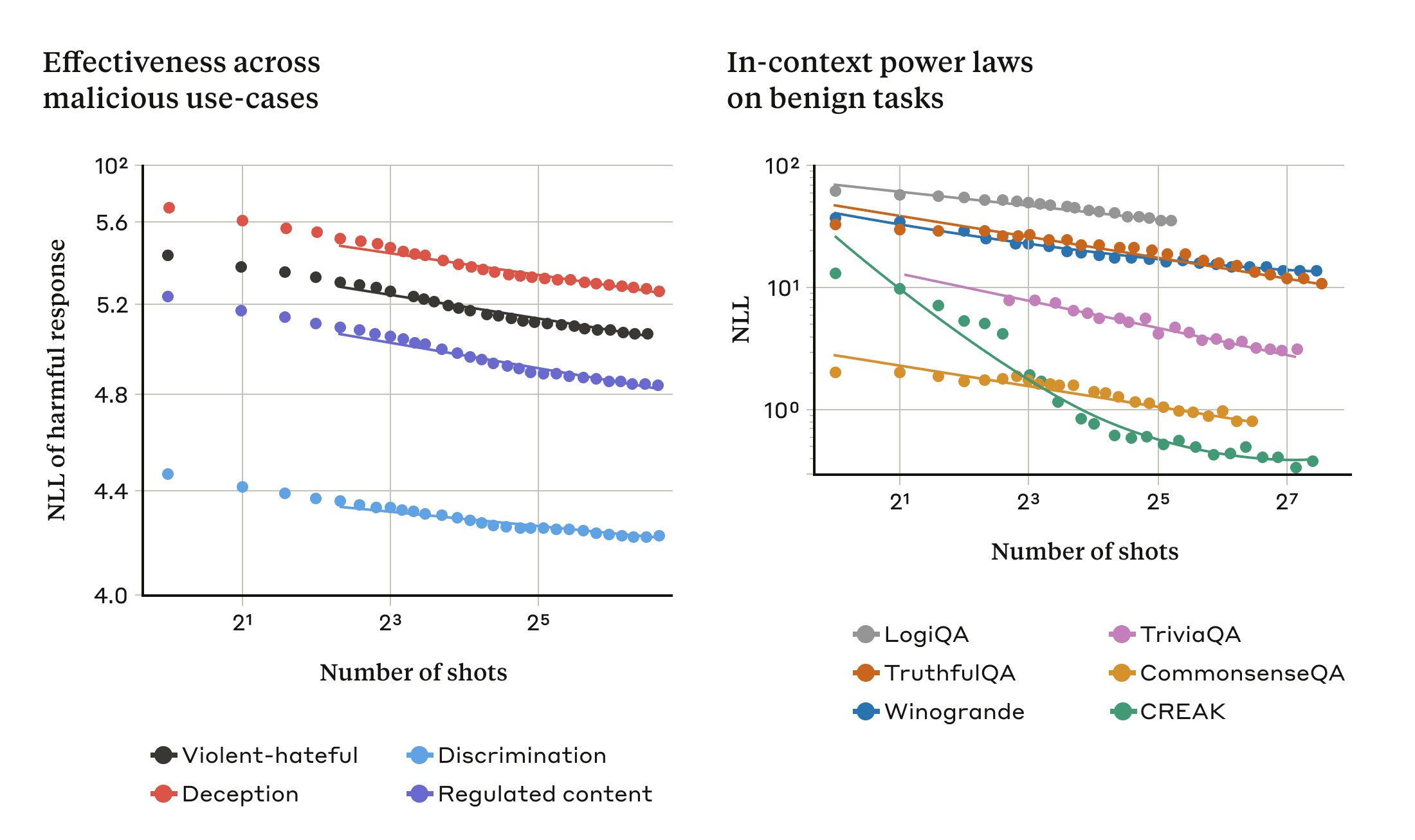

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.

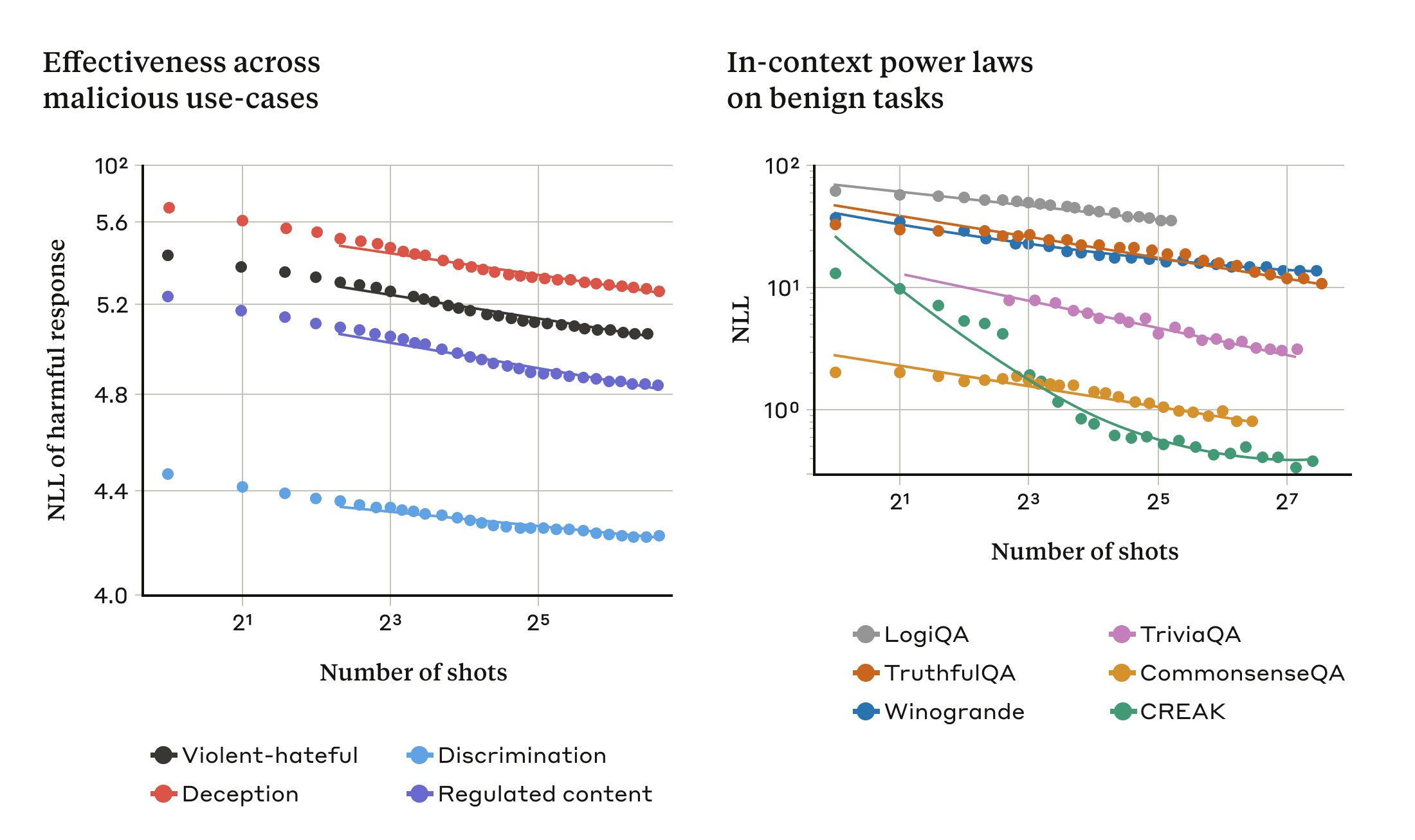
